Bot traffic: What it is and why you should care about it

Bots have become an integral part of the digital space today. They help us order groceries, play music on our Slack channel, and pay our colleagues back for the delicious smoothies they bought us. Bots also populate the internet to carry out the functions they’re designed for. But what does this mean for website owners? And (perhaps more importantly) what does this mean for the environment? Read on to find out what you need to know about bot traffic and why you should care about it!

What is a bot?

Let’s start with the basics: A bot is a software application designed to perform automated tasks over the internet. Bots can imitate or even replace the behavior of a real user. They’re very good at executing repetitive and mundane tasks. They’re also swift and efficient, which makes them a perfect choice if you need to do something on a large scale.

What is bot traffic?

Bot traffic is any non-human traffic to a website or app, and it’s a very normal thing on the internet. If you own a website, it’s very likely that you’ve been visited by a bot. As a matter of fact, bot traffic accounts for almost 30% of all internet traffic at the moment.

Is bot traffic bad?

You’ve probably heard that bot traffic is bad for your site. And in many cases, that’s true. But there are good and legitimate bots too. It depends on the purpose of the bots and the intention of their creators. Some bots are essential for operating digital services like search engines or personal assistants. However, some bots want to brute-force their way into your website and steal sensitive information. So, which bots are ‘good’ and which ones are ‘bad’? Let’s dive a bit deeper into this topic.

The ‘good’ bots

‘Good’ bots perform tasks that do not cause harm to your website or server. They announce themselves and let you know what they do on your website. The most popular ‘good’ bots are search engine crawlers. Without crawlers visiting your website to discover content, search engines have no way to serve you information when you’re searching for something. So when we talk about ‘good’ bot traffic, we’re talking about these bots.

Besides search engine crawlers, other good internet bots include:

- SEO crawlers: If you’re in the SEO space, you’ve probably used tools like Semrush or Ahrefs to do keyword research or gain insight into competitors. For those tools to serve you information, they also need to send out bots to crawl the web and gather data.

- Commercial bots: Commercial companies send these bots to crawl the web to gather information. For instance, research companies use them to monitor news on the market; ad networks need them to monitor and optimize display ads; ‘coupon’ websites gather discount codes and sales programs to serve users on their websites.

- Site-monitoring bots: They help you monitor your website’s uptime and other metrics. They periodically check and report data, such as your server status and uptime duration. This allows you to take action when something’s wrong with your site.

- Feed/aggregator bots: They collect and combine newsworthy content to deliver to your site visitors or email subscribers.

The ‘bad’ bots

‘Bad’ bots are created with malicious intentions in mind. You’ve probably seen spam bots that flood your website with nonsense comments, irrelevant backlinks, and atrocious advertisements. And maybe you’ve also heard of bots that take people’s spots in online raffles, or bots that buy up the good seats at concerts.

It’s due to these malicious bots that bot traffic gets a bad reputation, and rightly so. Unfortunately, a significant number of bad bots populate the internet nowadays.

Here are some bots you don’t want on your site:

- Email scrapers: They harvest email addresses and send malicious emails to those contacts.

- Comment spam bots: They flood your website with comments and links that redirect people to a malicious website. In many cases, they do this to advertise or to try to get backlinks to their sites.

- Scraper bots: These bots come to your website and download everything they can find. That can include your text, images, HTML files, and even videos. Bot operators will then re-use your content without permission.

- Bots for credential stuffing or brute force attacks: These bots will try to gain access to your website to steal sensitive information. They do this by trying to log in like a real user.

- Botnets of zombie computers: These networks of infected devices are used to perform DDoS (distributed denial-of-service) attacks. During a DDoS attack, the attacker uses such a network to flood a website with bot traffic. This overwhelms your web server with requests, resulting in a slow or unusable website.

- Inventory and ticket bots: They go to websites to buy up tickets for entertainment events or to bulk-purchase newly released products. Brokers use them to resell tickets or products at a higher price.

Why you should care about bot traffic

Now that you’ve got some knowledge about bot traffic, let’s talk about why you should care.

For your website performance

Malicious bot traffic strains your web server and sometimes even overloads it. These bots take up your server bandwidth with their requests, making your website slow or, in the case of a DDoS attack, utterly inaccessible. In the meantime, you might lose traffic and sales to competitors.

In addition, malicious bots disguise themselves as regular human traffic, so they might not be visible when you check your website statistics. The result? You might see random spikes in traffic without understanding why. Or, you might be confused as to why you receive traffic but no conversions. As you can imagine, this can hurt your business decisions because you don’t have the correct data.

For your site security

Malicious bots are also bad for your site’s security. They will try to brute-force their way into your website using various username/password combinations, or seek out weak entry points and report them to their operators. If you have security vulnerabilities, these malicious players might even attempt to install viruses on your website and spread those to your users. And if you own an online store, you have to manage sensitive information like credit card details that hackers would love to steal.

For the environment

Did you know that bot traffic affects the environment? When a bot visits your site, it makes an HTTP request to your server asking for information. Your server has to process that request and return the necessary information. Whenever this happens, your server must spend a small amount of energy to complete the request. Now, consider how many bots there are on the internet. You can probably imagine that the amount of energy spent on bot traffic is enormous!

In this sense, it doesn’t matter if a good or bad bot visits your site. The process is still the same. Both use energy to perform their tasks, and both have consequences on the environment.

Even though search engines are an essential part of the internet, they’re guilty of being wasteful too. They can visit your site too many times, and not even pick up the right changes. We recommend checking your server log to see how many times crawlers and bots visit your site. Additionally, there’s a crawl stats report in Google Search Console that also tells you how many times Google crawls your site. You might be surprised by some numbers there.

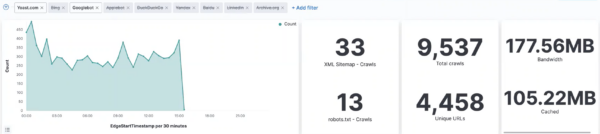
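If you want a rough idea of what such a server log check could look like, here’s a minimal sketch. It assumes your access log uses the common "combined" format, where the user agent is the last quoted field on each line. The list of bot names and the sample log lines are illustrative assumptions, not an exhaustive or authoritative list.

```python
import re
from collections import Counter

# Assumed user-agent substrings to look for; adjust to the bots you actually see.
BOT_MARKERS = ("Googlebot", "bingbot", "AhrefsBot", "SemrushBot")

# In the combined log format, the user agent is the last quoted field on the line.
USER_AGENT_PATTERN = re.compile(r'"([^"]*)"\s*$')


def count_bot_hits(log_lines):
    """Count requests per bot marker, based on the user-agent field."""
    hits = Counter()
    for line in log_lines:
        match = USER_AGENT_PATTERN.search(line)
        if not match:
            continue  # skip lines that don't end with a quoted field
        user_agent = match.group(1).lower()
        for marker in BOT_MARKERS:
            if marker.lower() in user_agent:
                hits[marker] += 1
                break
    return hits


# Made-up sample lines using documentation-only IP addresses.
sample = [
    '203.0.113.5 - - [01/Jan/2024:00:00:01 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.5 - - [01/Jan/2024:00:00:09 +0000] "GET /blog/ HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [01/Jan/2024:00:00:12 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '192.0.2.44 - - [01/Jan/2024:00:00:15 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/121.0"',
]

for bot, count in count_bot_hits(sample).most_common():
    print(bot, count)
```

Keep in mind that a user agent is only what the visitor claims to be. Malicious bots can disguise themselves, so treat these counts as a first impression rather than hard proof.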

A small case study from Yoast

Let’s take Yoast, for instance. On any given day, Google crawlers can visit our website 10,000 times. It might seem reasonable to visit us a lot, but they only crawl 4,500 unique URLs. That means roughly 5,500 of those visits, more than half, go to URLs that were already crawled that same day, so energy is spent crawling duplicate URLs over and over. Even though we regularly publish and update our website content, we probably don’t need all those crawls. These crawls aren’t just for pages; crawlers also go through our images, CSS, JavaScript, etc.
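To make those numbers concrete, here’s a small sketch of the arithmetic. The function name is made up for illustration; it simply treats every crawl beyond the first visit to a unique URL as a repeat.

```python
def repeat_crawl_share(total_crawls, unique_urls):
    """Return (repeat crawls, share of all crawls that were repeats)."""
    if total_crawls <= 0:
        raise ValueError("total_crawls must be positive")
    # Every crawl beyond one visit per unique URL counts as a repeat.
    repeat_crawls = max(total_crawls - unique_urls, 0)
    return repeat_crawls, repeat_crawls / total_crawls


# Figures from the Yoast example: 10,000 crawls, 4,500 unique URLs.
repeats, share = repeat_crawl_share(10_000, 4_500)
print(f"{repeats} repeat crawls ({share:.0%} of all crawls)")
```

You can plug in the totals from your own crawl stats report to see how your site compares.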

But that’s not all. Google bots aren’t the only ones visiting us. There are bots from other search engines, digital services, and even bad bots too. Such unnecessary bot traffic strains our website server and wastes energy that could otherwise be used for other valuable activities.

What can you do against ‘bad’ bots?

You can try to detect bad bots and block them from entering your site. This will save you a lot of bandwidth and reduce strain on your server, which in turn helps to save energy. The most basic way to do this is to block an individual IP address or an entire range of addresses. You should block an IP address if you identify irregular traffic from that source. This approach works, but it’s labor-intensive and time-consuming.
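As a rough illustration of the idea, here’s a minimal sketch that checks whether a visitor’s IP address falls inside a blocklist of single addresses and ranges. The addresses below are documentation-only examples, and the function name is an assumption. In practice you’d usually enforce this in your firewall, web server, or security plugin rather than in application code.

```python
import ipaddress

# Documentation-only example networks; replace with sources you have flagged.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),   # an entire range of addresses
    ipaddress.ip_network("198.51.100.7/32"),  # a single address
]


def is_blocked(ip_string):
    """Return True if the address falls inside any blocked network."""
    address = ipaddress.ip_address(ip_string)
    return any(address in network for network in BLOCKED_NETWORKS)


print(is_blocked("203.0.113.42"))  # inside the blocked range
print(is_blocked("192.0.2.1"))     # not on the list
```

Every new source of irregular traffic means another entry on the list, which is exactly why this approach gets labor-intensive quickly.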

Alternatively, you can use a bot management solution from providers like Cloudflare. These companies have an extensive database of good and bad bots. They also use AI and machine learning to detect malicious bots, and block them before they can cause harm to your site.

Security plugins

Additionally, you should install a security plugin if you’re running a WordPress website. Some of the more popular security plugins (like Sucuri Security or Wordfence) are maintained by companies that employ security researchers who monitor and patch issues. Some security plugins automatically block specific ‘bad’ bots for you. Others let you see where unusual traffic comes from, then let you decide how to deal with that traffic.

What about the ‘good’ bots?

As we mentioned earlier, ‘good’ bots are good because they’re essential and transparent in what they do. But they can still consume a lot of energy. Not to mention, these bots might not even be helpful for you. Even though what they do is considered ‘good’, they could still be disadvantageous to your website and the environment. So, what can you do about the good bots?

1. Block them if they’re not useful

You have to decide whether or not you want these ‘good’ bots to crawl your site. Does their crawling benefit you? More specifically: Does it benefit you more than it costs your servers, their servers, and the environment?

Let’s take search engine bots, for instance. Google is not the only search engine out there. Most likely, crawlers from other search engines have visited you as well. What if a search engine has crawled your site 500 times today, while only bringing you ten visitors? Is that still useful? If this is the case, you should consider blocking its crawler, since you don’t get much value from this search engine anyway.
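One way to put a number on that question is to compare crawls to the visitors a bot’s search engine sends you. The sketch below is only a starting point: the function names are made up, and the threshold of 20 crawls per visitor is an arbitrary assumption you’d want to tune for your own site.

```python
def crawls_per_visitor(crawls, visitors):
    """How many crawls a search engine makes for each visitor it sends."""
    if visitors == 0:
        return float("inf")  # crawling without sending any visitors at all
    return crawls / visitors


def worth_reviewing(crawls, visitors, max_crawls_per_visitor=20):
    """Flag a crawler whose crawl cost looks high compared to its traffic.

    The default threshold is an arbitrary assumption, not a recommendation.
    """
    return crawls_per_visitor(crawls, visitors) > max_crawls_per_visitor


# The example from the text: 500 crawls in a day, ten visitors.
print(crawls_per_visitor(500, 10))
print(worth_reviewing(500, 10))
```

A flagged crawler isn’t automatically one to block. It’s a prompt to look at whether that search engine matters for your audience.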

2. Limit the crawl rate

If bots support the crawl-delay directive in robots.txt, you should try to limit their crawl rate. This way, they won’t come back every 20 seconds to crawl the same links over and over. Because let’s be honest, you probably don’t update your website’s content 100 times on any given day, even if you have a larger website.

You should experiment with the crawl delay and monitor its effect on your website. Start with a slight delay, then increase it once you’re sure it doesn’t have negative consequences. Plus, you can assign a different crawl delay to crawlers from different sources. Unfortunately, Google doesn’t support crawl-delay, so you can’t use this for Google bots.
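Here’s a minimal sketch of what per-crawler delays could look like, checked with Python’s built-in robots.txt parser. The bot name ExampleBot and the delay values are placeholders, not recommendations. The delay is commonly interpreted as a number of seconds between requests, but each crawler decides for itself how to honor it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one delay for a specific crawler, one for the rest.
DELAY_ROBOTS_TXT = """\
User-agent: ExampleBot
Crawl-delay: 10

User-agent: *
Crawl-delay: 5
"""

delay_parser = RobotFileParser()
delay_parser.parse(DELAY_ROBOTS_TXT.splitlines())

# The named crawler gets its own delay; everyone else falls back to "*".
print(delay_parser.crawl_delay("ExampleBot"))
print(delay_parser.crawl_delay("AnotherBot"))
```

Checking the file with a parser before you publish it is a cheap way to confirm that each crawler ends up with the delay you intended.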

3. Help them crawl more efficiently

There are a lot of places on your website where crawlers have no business coming. Your internal search results, for instance. That’s why you should block their access via robots.txt. This not only saves energy, but also helps to optimize your crawl budget.
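As a sketch of how such a rule behaves, the example below blocks a hypothetical /search/ path for all crawlers and checks the result with Python’s built-in robots.txt parser. The path, bot name, and URLs are assumptions for illustration. If your internal search uses a query parameter instead of a path, you’ll need a rule that matches that pattern.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps all crawlers out of internal search results.
SEARCH_ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
"""

search_parser = RobotFileParser()
search_parser.parse(SEARCH_ROBOTS_TXT.splitlines())

# Internal search results are off-limits; regular content stays crawlable.
print(search_parser.can_fetch("ExampleBot", "https://example.com/search/bot-traffic"))
print(search_parser.can_fetch("ExampleBot", "https://example.com/blog/bot-traffic/"))
```

Remember that robots.txt is a request, not an access control. Well-behaved crawlers follow it, but ‘bad’ bots can simply ignore it.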

Next, you can help bots crawl your site better by removing unnecessary links that your CMS and plugins automatically create. For instance, WordPress automatically creates an RSS feed for your website comments. This RSS feed has a link, but hardly anybody looks at it anyway, especially if you don’t have a lot of comments. Therefore, the existence of this RSS feed might not bring you any value. It just creates another link for crawlers to crawl repeatedly, wasting energy in the process.

Optimize your website crawl with Yoast SEO

Yoast SEO has a useful and sustainable new feature: the crawl optimization settings! With over 20 available toggles, you’ll be able to turn off the unnecessary things that WordPress automatically adds to your site. You can see the crawl settings as a way to easily clean up unwanted overhead on your site. For example, you have the option to clean up your internal site search to prevent SEO spam attacks!

Even if you’ve only started using the crawl optimization settings today, you’re already helping the environment!
